New Haptic Hardware Offers a Waterfall of Immersive Experiences for Gamers

Immersive gaming experiences are currently running full throttle, with stunning visuals, spatial 3D sound, and various haptic devices combining to make the virtual world bigger, badder and, at times, more beautiful than real life.

Haptic technologies in gaming, which create the experience of touch, often rely on force feedback, in which a mechanical device applies pressure to give users real-time sensations. Examples might include vibrations in your gaming chair from a nearby explosion, or a joystick that becomes harder to move because of G-forces as you steer your Formula 1 racer through a tight turn.

University of Maryland researchers are now offering a new take on force feedback, delivering lifelike haptic experiences with controlled water jets that stream against your skin, all while keeping you and your surroundings as dry as an arid desert.

Their work, “JetUnit: Rendering Diverse Force Feedback in Virtual Reality Using Water Jets,” was recently presented and demoed at the Association for Computing Machinery’s User Interface Software and Technology Symposium in Pittsburgh.

The UMD device offers a wide range of haptic experiences, from subtle sensations that mimic a gentle touch to intense impacts that feel as realistic as a needle injection.

User testing has demonstrated that JetUnit is successful at creating diverse haptic experiences within a virtual reality narrative, with participants reporting a heightened sense of realism and engagement.

The key to this unparalleled sensory experience is a thin, watertight membrane made of nitrile—the same synthetic rubber used for sterile gloves—attached to a compact, self-contained chamber that effectively isolates the water from the user.

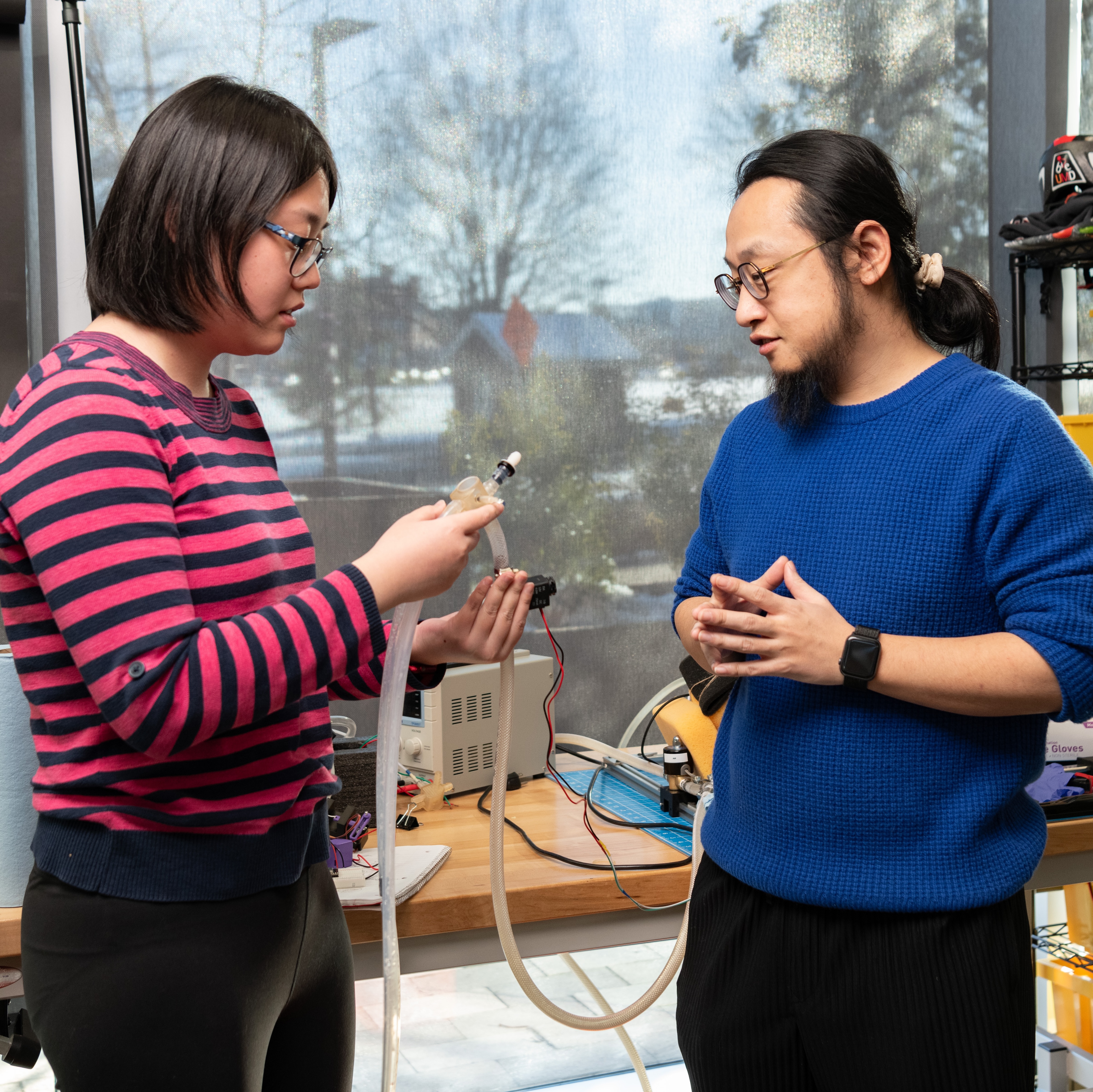

Zining Zhang (left in photo) is a third-year doctoral student in computer science working in the Small Artifacts (SMART) Lab and the lead researcher on the project. She says that the UMD team decided to try using contained water jets because water, as an incompressible fluid, transfers energy efficiently.

But achieving a full range of forces while keeping users dry presented a significant hurdle, and engineering the complete system, which includes a sediment filter, main pump, solenoid valve, recycling pump, control circuit and water tank, took considerable work.

Zhang says that as the project progressed, she received significant feedback from her adviser, Huaishu Peng (right in photo), an assistant professor of computer science, as well as from fellow SMART Lab members Jiasheng Li, a fourth-year doctoral student in computer science, and Zeyu Yan, a sixth-year doctoral student in computer science.

Other help on the project came from Jun Nishida, an assistant professor of computer science and new faculty member at UMD who engineers novel on-body interfaces that create new forms of embodiment, perception, and communication for the people wearing them.

Both Peng and Nishida have appointments in the University of Maryland Institute for Advanced Computer Studies, which Zhang credits with providing a “rich, collaborative environment” for the team to do their work.

The Singh Sandbox makerspace and its manager Gordon Crago also assisted, providing an array of tools and lighting hardware as the team was developing the JetUnit prototype.

Zhang believes JetUnit’s potential extends beyond immersive gaming. She suggests it might one day aid blind users by providing force feedback cues for spatial navigation and other interactions, thus enhancing accessibility.

Looking ahead, she envisions integrating thermal feedback and expanding JetUnit to a full-body haptic system that covers a larger area, paving the way for even broader applications.

—Story by Melissa Brachfeld, UMIACS communications group